👀 This is our MSTI Launch Project, conducted in collaboration with the Make4All Group, advised by Jerry Cao (PhD student) and Jennifer Mankoff (PI), along with Ruiqing Wang, Sam Wong, and Ying He. I served as the lead for the MSTI team. The project focused on improving eyedrop self-administration for individuals with motor and/or visual disabilities.

In this project, I was responsible for all hardware prototyping and assisted in developing the computer vision pipeline to detect successful eyedrop administration, as well as conducting user studies.

🔆 Highlights

- Conducted interviews with eyedrop users with low vision and motor disabilities to understand their needs and challenges.

- Developed multiple prototypes based on user feedback and evolving design requirements.

- Integrated key features into the final prototype, including: 1️⃣ aiming assistance 2️⃣ automatic eyedrop dispensing 3️⃣ feedback on successful self-administration.

We interviewed 15 eyedrop users with low vision and motor disabilities to understand the challenges they face, then performed thematic analysis on the transcripts.

We found several key challenges in eyedrop self-administration.

- Aiming: Older adults with low vision or reduced dexterity often struggle to align the eyedrop bottle with the eye and hold it steady.

- Squeezing: Limited hand strength or arthritis hinders elderly individuals from squeezing eye drop bottles effectively.

- Uncertainty: Users rely only on sensation to judge whether a drop landed in the eye, which often leads to over- or under-dosing.

We also found that existing eyedrop aids fail to address these challenges simultaneously.

In response, I developed the following prototypes:

To address the squeezing challenge, I developed a ratchet mechanism that converts a single squeeze into smaller, incremental steps of actuation.

⬆️ this is how it works

However, to achieve smaller actuation steps, the 3D-printed ratchet teeth needed to be very fine, which caused them to slip under load (e.g., when pressing the eyedrop bottle).

To address this issue, I redesigned the mechanism with concentric circular stages. As motion shifts from the outer to the inner radius, the linear displacement per rotation decreases proportionally to the radius, achieving finer actuation steps. This configuration also enables the outer ratchet teeth to be larger and mechanically stronger, reducing the likelihood of slipping or deformation under load.
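As a rough illustration of the geometry, the sketch below uses the arc-length relation s = r·θ to compare the tooth spacing on the outer stage with the travel delivered at the inner stage for one ratchet click. The tooth count and radii are made-up values chosen for the example, not the prototype's dimensions.

```python
import math

def step_geometry(teeth: int, outer_radius_mm: float, inner_radius_mm: float):
    """Return (tooth_pitch_mm, output_step_mm) for one ratchet click."""
    step_angle = 2 * math.pi / teeth             # rotation per click, in radians
    tooth_pitch = outer_radius_mm * step_angle   # arc length between teeth on the outer stage
    output_step = inner_radius_mm * step_angle   # arc length delivered at the inner stage
    return tooth_pitch, output_step

# Hypothetical dimensions: 24 teeth, 30 mm outer radius, 6 mm inner radius.
pitch, step = step_geometry(teeth=24, outer_radius_mm=30.0, inner_radius_mm=6.0)
print(f"tooth pitch: {pitch:.2f} mm, output step: {step:.2f} mm")
# tooth pitch: 7.85 mm, output step: 1.57 mm
```

With these numbers the teeth are spaced about five times wider than the actuation step they produce, which is the ratio of the two radii.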

However, because the mechanism advances in discrete steps, it is difficult to dispense exactly the desired amount.

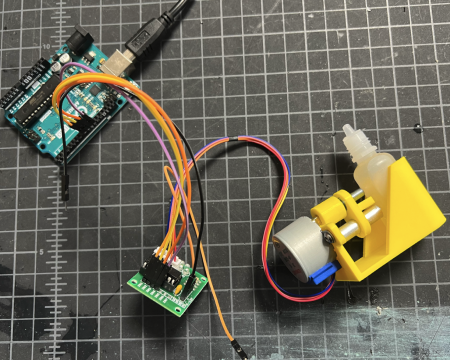

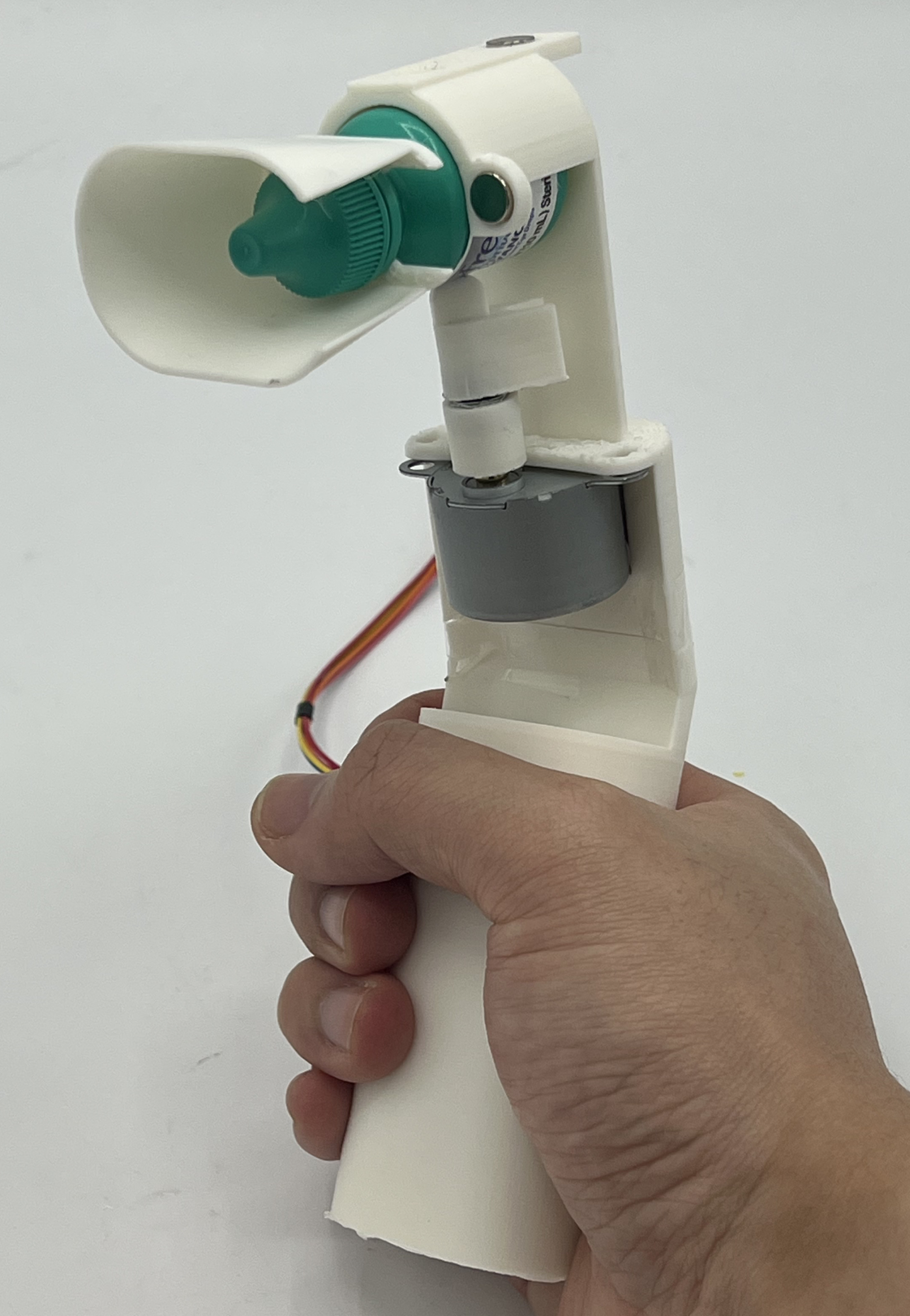

To enable continuous squeezing, I incorporated a stepper motor-driven actuation system capable of precisely controlling displacement.
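To give a sense of the resolution a stepper can offer, here is a minimal sketch that converts a target displacement into motor steps. It assumes, purely for illustration, that the motor drives a lead screw; the steps per revolution, microstepping setting, and screw lead are hypothetical values, not the prototype's actual drivetrain.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class LinearStage:
    full_steps_per_rev: int = 200    # assumed 1.8-degree stepper
    microsteps: int = 16             # assumed driver microstepping setting
    lead_mm_per_rev: float = 2.0     # assumed lead-screw travel per revolution

    @property
    def mm_per_step(self) -> float:
        """Linear travel produced by a single microstep."""
        return self.lead_mm_per_rev / (self.full_steps_per_rev * self.microsteps)

    def steps_for(self, displacement_mm: float) -> int:
        """Number of microsteps closest to the requested displacement."""
        return round(displacement_mm / self.mm_per_step)

stage = LinearStage()
print(stage.mm_per_step)     # travel per microstep, in mm
print(stage.steps_for(1.5))  # microsteps needed for 1.5 mm of squeeze
```

Under these assumptions each microstep moves the plunger well under a hundredth of a millimetre, so the squeeze is effectively continuous compared with a ratchet click.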

I integrated the motorized squeezing mechanism into a handheld device that also provides aiming assistance through a cap that rests around the eye for alignment.

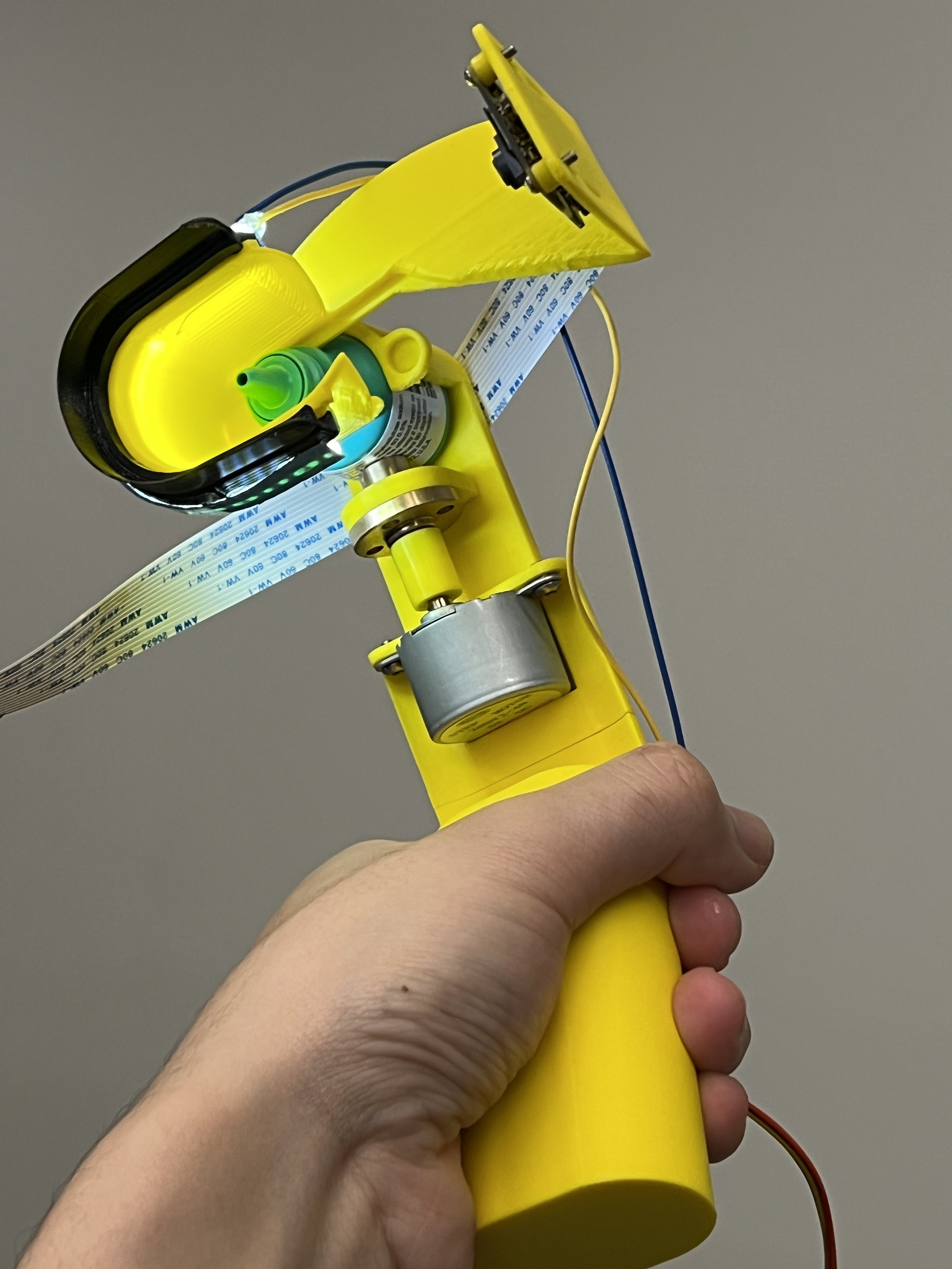

In a later iteration, I added a camera (connected to a Raspberry Pi) to the device to monitor whether the eyedrop was successfully dispensed into the eye.

I used Otsu thresholding to detect the presence of an eyedrop in the camera feed, enabling a closed-loop system that automatically dispenses a single drop each time the user presses the trigger button.
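The sketch below illustrates the idea rather than reproducing the device's code. On a Raspberry Pi this step would typically be a single library call such as OpenCV's `cv2.threshold` with the `cv2.THRESH_OTSU` flag; here the computation is spelled out in plain Python so it runs without dependencies. The assumption that a drop shows up as a bright blob, the `min_contrast` and `min_pixels` limits, and the `read_frame` / `advance_motor` callbacks are all hypothetical stand-ins.

```python
def otsu_threshold(pixels):
    """Return the gray level (0-255) that maximizes between-class variance."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w_bg = 0     # number of pixels at or below the candidate threshold
    sum_bg = 0   # summed intensity of those pixels
    for t in range(256):
        w_bg += hist[t]
        sum_bg += t * hist[t]
        if w_bg == 0:
            continue
        w_fg = total - w_bg
        if w_fg == 0:
            break
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var_between = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var_between > best_var:
            best_var, best_t = var_between, t
    return best_t

def drop_present(frame, min_contrast=40, min_pixels=4):
    """Heuristic: Otsu must split off a bright region of enough size and contrast."""
    pixels = [p for row in frame for p in row]
    t = otsu_threshold(pixels)
    fg = [p for p in pixels if p > t]
    bg = [p for p in pixels if p <= t]
    if len(fg) < min_pixels or not bg:
        return False
    contrast = sum(fg) / len(fg) - sum(bg) / len(bg)
    return contrast >= min_contrast

def dispense_one_drop(read_frame, advance_motor, max_steps=500):
    """Advance the squeeze mechanism until a drop is seen, then stop.

    read_frame and advance_motor are hypothetical callbacks for the camera
    and the stepper driver. Returns the steps taken, or None on timeout.
    """
    for steps_taken in range(1, max_steps + 1):
        advance_motor()
        if drop_present(read_frame()):
            return steps_taken
    return None

# Synthetic 8x8 frames: a dim, slightly noisy background, then a bright 3x3 blob.
empty = [[20 + (r + c) % 3 for c in range(8)] for r in range(8)]
with_drop = [row[:] for row in empty]
for r in range(2, 5):
    for c in range(3, 6):
        with_drop[r][c] = 200

frames = iter([empty] * 4 + [with_drop])
steps = dispense_one_drop(lambda: next(frames), lambda: None)
print(steps)  # 5
```

The contrast check matters because Otsu's method always returns some threshold, even for a frame that contains only background noise.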

I also applied Canny edge detection to identify the border of the eyedrop and fitted a circle to the extracted edges to track the drop’s trajectory. When the device is properly aligned with the eye, successful administration is detected when the drop’s trajectory intersects the eye area.
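A minimal sketch of the fitting and intersection logic, under stated assumptions: the edge points would come from an edge detector such as OpenCV's `cv2.Canny`, but here they are generated synthetically; the circle fit is a standard algebraic least-squares fit, which may differ from the fitting method used on the device; and the eye area is modelled as a circle with made-up coordinates.

```python
import math

def fit_circle(points):
    """Algebraic least-squares circle fit. Returns (cx, cy, r)."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    suu = svv = suv = suuu = svvv = suvv = svuu = 0.0
    for x, y in points:
        u, v = x - mx, y - my              # coordinates relative to the centroid
        suu += u * u; svv += v * v; suv += u * v
        suuu += u ** 3; svvv += v ** 3
        suvv += u * v * v; svuu += v * u * u
    det = suu * svv - suv * suv
    if abs(det) < 1e-12:
        raise ValueError("points are collinear or too few to fit a circle")
    bu = (suuu + suvv) / 2
    bv = (svvv + svuu) / 2
    uc = (bu * svv - bv * suv) / det       # centre offset from the centroid
    vc = (suu * bv - suv * bu) / det
    r = math.sqrt(uc * uc + vc * vc + (suu + svv) / n)
    return mx + uc, my + vc, r

def segment_hits_circle(p, q, centre, radius):
    """True if the segment p-q passes within `radius` of `centre`."""
    (px, py), (qx, qy), (cx, cy) = p, q, centre
    dx, dy = qx - px, qy - py
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, ((cx - px) * dx + (cy - py) * dy) / seg_len_sq))
    return math.hypot(px + t * dx - cx, py + t * dy - cy) <= radius

def trajectory_hits_eye(centres, eye_centre, eye_radius):
    """Check each consecutive pair of drop centres against the eye region."""
    return any(segment_hits_circle(a, b, eye_centre, eye_radius)
               for a, b in zip(centres, centres[1:]))

def circle_points(cx, cy, r, n=12):
    """Synthetic stand-in for the edge pixels of a drop in one frame."""
    return [(cx + r * math.cos(2 * math.pi * k / n),
             cy + r * math.sin(2 * math.pi * k / n)) for k in range(n)]

# A drop falling straight down the image over four frames.
centres = [fit_circle(circle_points(50, y, 4))[:2] for y in (10, 30, 50, 70)]
hit = trajectory_hits_eye(centres, eye_centre=(52, 60), eye_radius=8)
miss = trajectory_hits_eye(centres, eye_centre=(90, 60), eye_radius=8)
print(hit, miss)  # True False
```

Checking segments between consecutive centres, rather than only the centres themselves, avoids missing a hit when the drop crosses the eye region between two frames.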

Check out our video!

Explore the full poster below: