OmniSurface @ Yuecheng, Wanling, Yi

🫳 This is an IoT device that turns passive surfaces into interactive inputs. It can sense different gestures on various surfaces, e.g. knocking, tapping, or fist bumping. It can also sense which object is interacting with the surface, e.g. a hand, a pencil, or a mug.

🔆 Highlights

- Highly integrated hardware design; software architecture fully functional and scalable on the Azure Cloud.

- Customized gestures: users can define their own gestures, and add new data to the training dataset to customize their machine learning classifier.

- We envision this novel input being useful for accessibility: people who cannot perform precise gestures on traditional tangible interfaces (e.g. rotating a knob) might find it easier to interact with a larger surface that tolerates less precise input. It also has potential applications in the gaming industry.
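As a rough illustration of the customized-gesture idea above, the sketch below shows how a user-recorded gesture could be added to a training set and then recognized. It is not the project's classifier: the nearest-centroid method, the `GestureDataset` class, the gesture labels, and the two-value feature vectors are all assumptions made for this example.

```python
from collections import defaultdict
from math import dist


class GestureDataset:
    """Labeled feature vectors grouped by gesture name (hypothetical helper)."""

    def __init__(self):
        self.samples = defaultdict(list)

    def add_sample(self, label, features):
        # A user-defined gesture is just a new label with its own recordings.
        self.samples[label].append(list(features))

    def centroids(self):
        # Average each gesture's feature vectors component-wise.
        return {
            label: [sum(col) / len(vectors) for col in zip(*vectors)]
            for label, vectors in self.samples.items()
        }


def classify(dataset, features):
    """Return the gesture whose centroid is closest to `features`."""
    centroids = dataset.centroids()
    return min(centroids, key=lambda label: dist(centroids[label], features))


dataset = GestureDataset()
dataset.add_sample("knock", [0.9, 0.1])   # made-up feature values
dataset.add_sample("knock", [0.8, 0.2])
dataset.add_sample("tap", [0.2, 0.1])
# The user records a custom gesture and adds it to the training data.
dataset.add_sample("fist_bump", [0.9, 0.9])
predicted = classify(dataset, [0.85, 0.8])
```

With only one or two samples per gesture, a simple model like this is very sensitive to how distinct the gestures are, which is the small-dataset issue noted under Limitations.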

⚠️ Limitations

- The accuracy of the classifier depends heavily on which gestures users define and on the size of the training dataset. More research is needed to improve accuracy with a small training dataset.

- Research is also needed to optimize the hardware: do we need two different sensors? Would an ADC with a higher sampling rate and resolution improve the ML accuracy?

🔗 Github: https://github.com/OmniSurface

🍾 This is the final project of the TECHIN 515 course @ GIX, UW. This is a group project in collaboration with Wanling and Yi. I was responsible for the design concept iteration, hardware implementation and cloud service development.

The software pipeline includes control of a smart home device (a lamp with different colors). Different gestures turn different colors of the LED on or off.
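The gesture-to-lamp mapping described above can be sketched as a small lookup table. The gesture labels, the color names, and the `apply_gesture` function are hypothetical and chosen only for illustration; the actual project may map gestures differently.

```python
# Hypothetical gesture labels and colors; the real project may use others.
GESTURE_TO_COLOR = {
    "knock": "red",
    "tap": "green",
    "fist_bump": "blue",
}


def apply_gesture(lamp_state, gesture):
    """Toggle the color mapped to `gesture` and return a new lamp state."""
    color = GESTURE_TO_COLOR.get(gesture)
    if color is None:
        return dict(lamp_state)  # Unknown gesture: leave the lamp unchanged.
    new_state = dict(lamp_state)
    new_state[color] = not lamp_state.get(color, False)
    return new_state


state = {"red": False, "green": False, "blue": False}
state = apply_gesture(state, "knock")  # red turns on
state = apply_gesture(state, "tap")    # green turns on
state = apply_gesture(state, "knock")  # red turns off again
```

Keeping the mapping in a plain dictionary means a newly defined gesture only needs one new entry to control a color.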

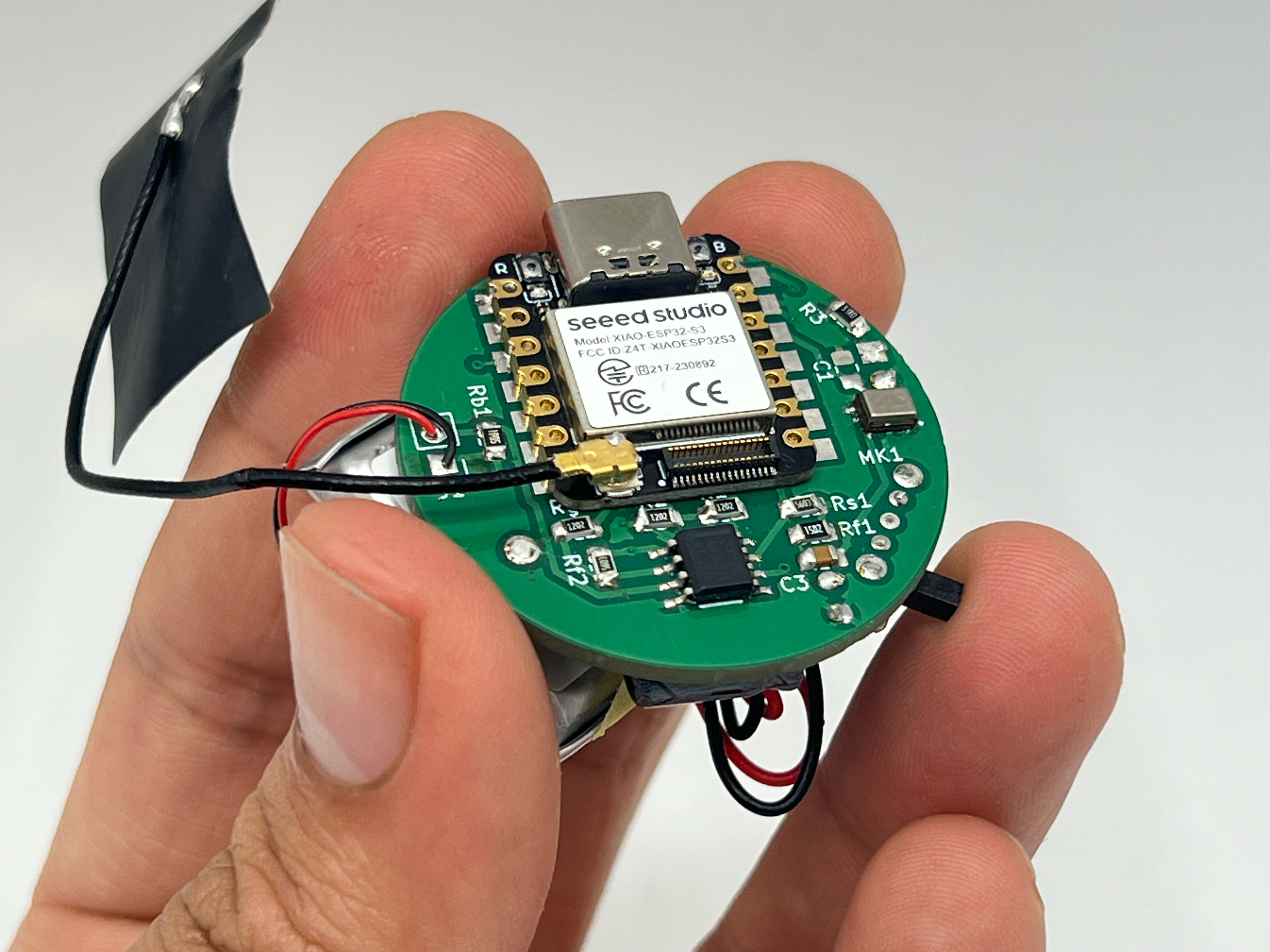

Combining piezoelectric sensors with bone conduction MEMS microphones lets the device pick up both obvious and subtle vibrations on the surface. The signals from both sensors are amplified by a dual-channel amplifier and fed into the ESP32S3, which sends the data to the Azure Function App for further processing and classification.
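To make the data flow above more concrete, here is a minimal sketch of how one capture window from the two channels might be packaged and summarized on the cloud side. Everything in it is an assumption for illustration: the JSON field names, the 8000 Hz sample rate, the 12-bit ADC values centered near 2048, and the choice of RMS and peak amplitude as features. It does not reproduce the project's firmware or its Azure Function code.

```python
import json
from math import sqrt


def build_payload(piezo, mic, sample_rate_hz):
    """Serialize one capture window from both sensor channels as JSON."""
    return json.dumps({
        "sample_rate_hz": sample_rate_hz,
        "piezo": list(piezo),
        "mic": list(mic),
    })


def channel_features(samples):
    """Compute RMS and peak amplitude after removing the DC offset."""
    mean = sum(samples) / len(samples)
    centered = [s - mean for s in samples]
    rms = sqrt(sum(c * c for c in centered) / len(centered))
    peak = max(abs(c) for c in centered)
    return {"rms": rms, "peak": peak}


def extract_features(payload):
    """Cloud side: parse the JSON payload and summarize each channel."""
    data = json.loads(payload)
    return {name: channel_features(data[name]) for name in ("piezo", "mic")}


# Made-up ADC readings: a strong hit on the piezo, a faint one on the mic.
payload = build_payload(
    piezo=[2048, 2148, 1948, 2048],
    mic=[2048, 2050, 2046, 2048],
    sample_rate_hz=8000,
)
features = extract_features(payload)
```

Summaries like these, computed per channel, are one possible input to a gesture classifier; comparing them across the two channels is also a simple way to explore whether both sensors contribute useful information.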

The mockup smart home lamp is controlled by an ESP32S3 and Blynk.